Privacy Canada is community-supported. We may earn a commission when make a purchase through one of our links. Learn more.

Big O Notation

Big O Notation is a way of expressing the computational difficulty of a certain computation or algorithm. It is not neccesarily a measure of how much time the calculation would take, but rather how efficient it is in terms of how many calculations it must make to execute – called the time complexity. More technically, Big O Notation is a measure of how well an algorithm scales performance wise as the amount of data increases. First, let’s take a look at what Big O Notation looks like.

Big O notation generally contains two numbers – n, which is the size of the dataset, and generally another symbol such as an exponent, log, or coefficient multiplying with n. In this way, the result of the O(n) expression is how many calculations must be performed based on the input data size n. Let’s take a look at the first one. O(N), spoken as order of n, is an example of linear time. This means that the time complexity of the algorithm increases linearly with the amount of data. So if the algorithm took roughly 5 seconds to deal with 5 amounts of data, it would be realistic to expect it to take 10 seconds for 10 amounts.

The second one (O(n^2)) is where things get more interesting. This is often the time complexity of algorithms that involve nested operations (a subset of operations for each individual operation for each amount of data). A common example of an algorithm with O(n^2) complexity is the Bubble Sort, an inefficient array sorting algorithm.

So what’s the difference between these two? O(n) is obviously more efficient than the squared O(n^2), we can tell that just by looking at it. How much more efficient is that though? How much faster would it complete? Well – It’s not quite that simple. The speed of O(n) is directly related to how much data is given in – which makes sense. O(n^2) however, increases exponentially (or in this case by the square) based on how much data it is given. From that you can figure that O(n^3) is even more inefficient, and would take exponentially longer than the other two, and so on.

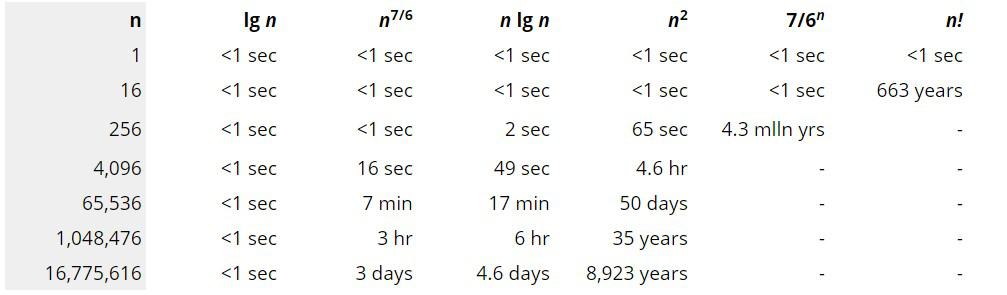

With modern processing power, the difference between these can be negligible with smaller data sets. Where it gets interesting is when you start giving algorithms that are exponentially less efficient than say a linear one. Here’s some sample execution times for a variety of different n values:

Algorithms with lower time complexity (such as O(n lg n), the third column) increase with bigger data sets, but only relative to their complexity. Whereas the more efficient algorithms can handle a data set size of 16 million in under a period of a few days, it very quickly exponentially grows to millions of years (and eventually to numbers too big to list here). Big O Notation is a convenient way to express the complexity and computational complexity of a particular algorithm without having to dive deep into how the algorithm works – you can just say it operates in O(n!) time, and people can immediately know if they can throw a big data set in and expect your algorithm to be able to handle it within a reasonable time period, or if they’ll be waiting a few centuries before it finishes.